Bayesian thinking & inference, part 2

Level ∞ - BILL BETTOR


Welcome Degen Gambler!

Bayes Craze! It’s time for the second part of our Bayesian series, a series which aims to introduce you to the world of Bayesian learning & put you in a Bayesian state of mind, or dare we say, a correct state of mind.

If you haven’t read part 1 yet, begin there.

The concepts presented in this series are relatively tough to grasp and unless you have had a proper amount of training with thinking in conditionals, there’ll be a lot of hidden insights that you simply won’t *see* at first glance. Therefore, take your time with the material. Read. Think. Read again. Or, equivalently: Have a belief. Read. Update your belief.

NHL-learning

In the conclusion of Part 1, two problems were posed. The first, the investigation of a backtest/sequence of bets, was handled in a comprehensive manner in “Bet Sequences, an analysis”. Today we’ll have a deep look at the second problem:

After having read our posts on web scraping, you have just scraped and stored all the NHL data from the previous season. You are now interested in learning as much as possible about it. For example, since you find live odds intriguing, you wonder what the probability of a team winning a game, conditional on taking a lead into the third period, is.

We’ll examine this question on multiple levels.

First, we’ll do the most basic thing: collect data on prior NHL games, look at the subset of games where one team has taken a lead into the third period and from there determine how often those teams have been able to maintain their lead. This will provide us with a simple, fundamental fraction to keep in mind while e.g. betting same game parlays in the NHL.

As soon as we’re done with this initial step, we’ll advance the analysis with an alternative perspective, a Bayesian perspective. By approaching the problem from these two angles, the deficiency & NGMI-level of the former will become apparent. As we’ll see, there can be huge differences between “learning” and *learning*.

Throughout the post we’ll do our best to discuss details to consider when running similar statistical analyses *in real life*. Remember, we’re not here to write papers, we’re here to back our beliefs with real world money. Thus, we cannot afford to overlook or misinterpret important features related to the management & understanding of data.

A trivial conditional measure

Let’s begin with the basics. We’ve fetched the regular season data for the NHL 2022/2023 from what we believe is the official NHL API [EXAMPLE of full game data for the first 2022-2023 game]. Relevant data for our purposes has been stored in the file below.

Python code for this post can be found in this folder in our RandomSubstackMaterial repo on Github.

Collecting the data and performing a quick check for the fraction we’re interested in yields a value of 82.94 % [1 312 games in total, 1 008 of those had one of the teams in front after two periods, 836/1 008 kept the lead & won the game]. Conclusion: absent better information, the probability of a team ending up as winners in a game *given a lead after two periods* is ~ 83 %.
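As a quick sanity check, the fraction can be recomputed directly from the counts quoted above. A minimal sketch [the variable names are ours, not taken from the repo]:

```python
# Counts quoted in the paragraph above (NHL regular season 2022/2023).
total_games = 1312
games_with_leader_after_two = 1008
leader_won = 836

# Empirical conditional frequency: P(win | lead after two periods).
win_given_lead = leader_won / games_with_leader_after_two

# Share of all games that had a leader [i.e. were not tied] after two periods.
share_with_leader = games_with_leader_after_two / total_games

print(f"P(win | lead after 2 periods) ~ {win_given_lead:.2%}")
print(f"Games with a leader after 2 periods: {share_with_leader:.2%}")
```

Note that the denominator is the 1 008 games with a leader, not the full 1 312. Conditioning means shrinking the dataset first & counting second.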

Before we continue, let’s have a look at the different problems with the above approach.

All we’re left with is a number. The world is far too uncertain to reduce all our knowledge into simple numbers.

Numbers give you a false sense of security. Behind every number there's a probability distribution.

One of the beauties with Bayes is that it allows us to quantify our levels of uncertainty, i.e. helps us determine how sure we can be that our numbers [or intervals] are correct or at least approximately so.

What we obtain using this method is a very, very general measure/average. In practice, this won’t help much unless we are able to split our dataset into more specific subsets, with each such subset being a collection of teams/setups possessing similar features.

A huge underdog taking a lead into the third period is *less likely* to win the game than a favourite in the same position. Priors matter. In reality we’d like to condition on more than “team in the lead after first two periods” to actually learn useful stuff.

This leads us to an interesting truth concerning data.

YOU NEVER HAVE BIG DATA. Why? Cause if you do, you should immediately dive deeper into your dataset, find clusters within it [observations with similar features] & repeat your analysis on each one of those clusters. Effectively, in practice you’ll always have to ask yourself “small data questions” or you’re likely doing things wrong.

Bayes comes in handy here since Bayesian inference is more or less made for “small data problems”. And, since all data problems should, if possible, be reduced into small data problems, Bayes must be the way to go!

Note: The amount of data increases the rate at which you’re learning in at least two ways:

More data yields more certainty [variance goes down with more observations] in the “population” estimations [full dataset averages, medians etc.].

More data creates better opportunities to condition on multiple factors at once [understand how the values of quantities differ between subsets/clusters].
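To get a feel for the first point, here is a rough sketch of how the standard error of an observed fraction shrinks as observations pile up. It uses the textbook formula sqrt(p(1-p)/n). The sample sizes other than 1 008 are made up for illustration.

```python
import math


def standard_error(p: float, n: int) -> float:
    """Standard error of an observed proportion p based on n observations."""
    return math.sqrt(p * (1 - p) / n)


p_hat = 0.83  # roughly the fraction observed above

# 1 008 is our actual number of games; the other sizes are illustrative only.
for n in (50, 200, 1008, 5000):
    print(f"n = {n:>5}: standard error ~ {standard_error(p_hat, n):.4f}")
```

Uncertainty only falls with the square root of n, so quadrupling the data merely halves it. That is one reason why splitting a dataset into many small clusters gets expensive fast.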

Now that we’ve hinted at there being a better way of observing the world, let’s actually try to analyze the data from this somewhat more sophisticated viewpoint. At the end, it will be clear that the results yield superior answers to our inquiries.

The Bayesian perspective

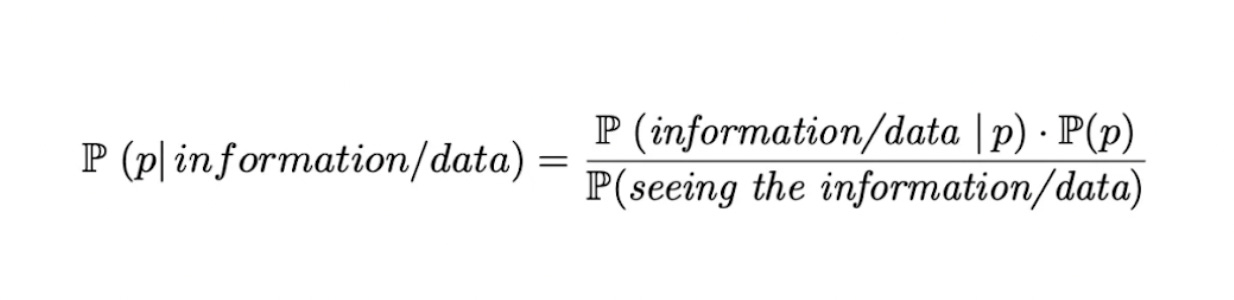

Time for a truly Bayesian interpretation of the problem! Recall from this part of Bayesian thinking & inference, part 1 that Bayes Theorem is the mathematical formula that allows us to move from a prior belief to a posterior one [updated beliefs after observing the data], hence the term “Bayesian”.
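For reference, the formula in its standard textbook form, with θ denoting whatever quantity we’re trying to learn [the symbol θ is our notation here, not something used elsewhere in the post]:

```latex
P(\theta \mid \text{data})
  = \frac{P(\text{data} \mid \theta)\, P(\theta)}{P(\text{data})}
\qquad\Longrightarrow\qquad
\underbrace{P(\theta \mid \text{data})}_{\text{posterior}}
  \;\propto\;
\underbrace{P(\text{data} \mid \theta)}_{\text{likelihood}}
  \times
\underbrace{P(\theta)}_{\text{prior}}
```

The denominator does not depend on θ. It only rescales the result so that the posterior sums/integrates to 1.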

The image below illustrates a simplified version of the typical Bayesian workflow. As we progress through this process for our particular NHL-question, we will elaborate on each step and emphasize the key points to keep in mind.

Note: Like for any practical learning or modelling process, numerous aspects need to be considered at each step of the Bayesian workflow. However, since our main goal with this post is to present the core & power of Bayesian methods rather than overwhelm you with details, such issues will be left out of this piece.

Bayes, step 1

Step 1 in the Bayesian workflow is to assign our variable/s of interest a prior distribution. This probability distribution should include the *prior information* we have regarding the variable/parameter. Different people choose different priors, and since priors are an important part of determining our posterior distribution [prior updated with new data], it’s important to get them right. Precision in the prior translates into precision in the posterior [our updated beliefs].

Absent domain knowledge it’s common to assign an uninformative/flat prior to problems one hasn’t investigated before. This has two effects:

Less precision in the output unless you have *a lot* of data.

Decreased risk of obtaining heavily biased estimates due to poor prior beliefs.

For simplicity we’ll be using an uninformative/flat prior, i.e. assume all probabilities between 0 and 1 are equally likely [*obviously* false but the idea of this post isn’t to construct a clever model, it’s to push our readers in the right direction to come up with one themselves]. In reality, a more natural way of approaching the problem would be to run an equivalent model on earlier seasons, a process that would yield an “aggregated posterior”. This distribution would then be tweaked with domain specific knowledge [such as beliefs regarding changes to the game that are expected to have an effect on the probability of a team winning a game, conditional on taking a lead into the third period] and subsequently used as a prior for the analysis of the latest season.
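To make the two options concrete, here’s a small sketch comparing the flat prior with a prior built from earlier seasons. It relies on the standard fact that Uniform(0, 1) is the same distribution as Beta(1, 1). The earlier-season counts and the discount factor are HYPOTHETICAL, picked only to show the mechanics.

```python
import math


def beta_mean_sd(a: float, b: float) -> tuple[float, float]:
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    variance = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(variance)


# Flat prior: Beta(1, 1) is exactly Uniform(0, 1).
flat_prior = (1.0, 1.0)

# HYPOTHETICAL aggregate from earlier seasons [not real NHL data].
earlier_wins, earlier_games = 2450, 3000

# One crude way to "tweak" the aggregate: down-weight old seasons because
# the game may have changed. The factor 0.1 is an arbitrary assumption.
discount = 0.1
informed_prior = (
    1 + discount * earlier_wins,
    1 + discount * (earlier_games - earlier_wins),
)

for label, (a, b) in (("flat", flat_prior), ("informed", informed_prior)):
    mean, sd = beta_mean_sd(a, b)
    print(f"{label:>8} prior: Beta({a:.0f}, {b:.0f}), mean ~ {mean:.3f}, sd ~ {sd:.3f}")
```

The discount is a blunt stand-in for domain knowledge. The less you trust old seasons to resemble the new one, the smaller you’d make it & the wider your prior becomes.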

Note I: Read a [for some reason popular] terrible take on Twitter last week:

Gambling ads are the absolute worst. It’s far from the only harmful product, but unlike alcohol cigarettes fast food etc, these ads’ purpose is to mind fuck you into believing something that’s false: that you can use your sports knowledge to win money.

Couldn’t agree less. Sports knowledge is what perfects your predictive ability. It is exactly the “tweak” mentioned at the end of the above paragraph that makes the difference between reality and what every data nerd sees. We’re not saying everyone in the markets is doing this fancy “tweak the prior”-Bayes stuff we’re trying to explain here. However, if you have a deep enough look at it & allow for some creativity you’ll realise that what we’re really trying to do with these Bayesian models is to approximate the thought processes we constantly perform in everyday life. A prior belief. New data. An updated belief. Thus, in this language “better sports knowledge” can be translated into “more precise priors”, which of course will have a positive effect on the quality of your/our predictions.

Note II: Sample sizes are a big thing in standard statistics. In Bayes World they don’t matter as much. Why? As you let data hit your Bayesian model, it will incorporate two things: in which direction the evidence points AND the amount of information/certainty that has been gained from it. If your dataset is small, the posterior will be widely spread [estimates are *uncertain*]. As your dataset grows large, the posterior distribution will concentrate more and more of its mass around the true value [estimates become *certain*], provided the model is a fair description of reality. Thus, there’s no point in obsessing about sample sizes since they’ll be naturally embodied in the shape of the posteriors.
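A sketch of the claim in Note II. With a flat prior and a Binomial likelihood, the posterior has a known closed form [Beta(1 + wins, 1 + losses), a standard conjugacy result], so we can look at its spread without running any sampler. The four datasets below are hypothetical & share the same observed fraction of 5/6.

```python
import math


def flat_prior_posterior_sd(wins: int, games: int) -> float:
    """Posterior sd of p under a flat prior, i.e. the sd of Beta(1 + wins, 1 + losses)."""
    a, b = 1 + wins, 1 + (games - wins)
    return math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


# HYPOTHETICAL datasets: same observed fraction, very different sizes.
for wins, games in ((5, 6), (50, 60), (500, 600), (5000, 6000)):
    sd = flat_prior_posterior_sd(wins, games)
    print(f"{wins:>4}/{games:<4} -> posterior sd ~ {sd:.4f}")
```

Same direction of evidence every time, yet the posterior narrows as the counts grow. The sample size is not checked separately, it is baked into the shape of the distribution.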

Bayes, step 2

Step 2 is to use preexisting mathematical models to approximate the data generating process as well as possible. The data you’re looking at has been generated in some way, right? The mission for you is to find, understand & specify this process!

In our case this is fairly easy since we have an excellent mathematical model for the problem at hand, namely the Binomial model. Each data point is determined in the following way: if, after two periods, one of the teams is in front, we assign the data point the value “TRUE” [or 1] if that team goes on to win the game. Otherwise, it’s assigned “FALSE” [or 0]. The event “TRUE” [1] is assumed to occur with probability p [this is the parameter we’re trying to estimate] while the complement, the team being up at 2/3 of the game yet losing it, occurs with probability 1-p.

Note that if there’s a tie in the game after two periods, we exclude it from our research.
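The encoding rule can be sketched as a small function. The function and argument names below are hypothetical [the actual column layout in the data file may differ]. Only the logic follows the description above: 1 if the leader wins, 0 if the leader loses, nothing if the game is tied after two periods.

```python
from typing import Optional


def encode_game(
    home_goals_after_two: int,
    away_goals_after_two: int,
    home_won: bool,
) -> Optional[int]:
    """Return 1 if the team leading after two periods won, 0 if it lost,
    and None if the game was tied after two periods [excluded from the analysis]."""
    if home_goals_after_two == away_goals_after_two:
        return None
    home_led = home_goals_after_two > away_goals_after_two
    # The leader won exactly when "home led" and "home won" agree.
    return 1 if home_led == home_won else 0


# HYPOTHETICAL games: (home goals after 2, away goals after 2, home won?)
sample_games = [(2, 1, True), (0, 3, True), (1, 1, False), (1, 2, False)]
encoded = [encode_game(*game) for game in sample_games]
kept = [value for value in encoded if value is not None]
print(encoded, "->", sum(kept), "wins in", len(kept), "games with a leader")
```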

Therefore, after N such games, the number of 1’s is distributed according to a Bin(N, p), a binomial probability model with N = number of games with one team in front after two periods & p = probability of a team winning *given* in front after 2/3. Equivalently, since a Binomial is a sum of Bernoullis, our problem could of course be modeled as a sum of N Bernoulli(p) random variables as well.
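Written out, the Binomial model says the following about K, the number of games in which the leader went on to win [standard textbook formula, K is our label for that count]:

```latex
P(K = k \mid N, p) = \binom{N}{k}\, p^{k}\, (1 - p)^{N - k},
\qquad k = 0, 1, \dots, N
```

For our dataset, N = 1 008 and the observed k is 836. The unknown is p.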

Bayes, step 3

In step 3 our mission is to code up a Bayesian algorithm that has the ability to learn from the data we’re feeding it. To do this, we’ll be using PyMC, a probabilistic programming library for Python.

PyMC is a probabilistic programming library for Python that allows users to build Bayesian models with a simple Python API and fit them using Markov chain Monte Carlo (MCMC) methods.

For now, don’t worry about the intricacies of PyMC [autism overload]. Bayesian models become mathematically intractable *extremely* fast [they’re terrible to work with using “pen & paper”-methods] & MCMC is simply a class of clever algorithms that despite this helps us find [more specifically: efficiently sample from] the posterior distributions.

A simple

pip install pymc

will install the library & its dependencies.

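Next, we initiate a Python program where our modelling will take place by importing PyMC & arviz [visualization library for Bayesian models], together with numpy & pandas which are needed for loading the data.

import numpy as np

import pandas as pd

import pymc as pm

import arviz as az

After loading the data,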

# load the data, code assumes NHLData.xlsx exists in the same directory

nhldata_df = pd.read_excel("NHLData.xlsx")

case_data_uncleaned = nhldata_df.Case.to_numpy()

case_data = case_data_uncleaned[~np.isnan(case_data_uncleaned)]

number_of_ones = int(sum(case_data))

number_of_games = len(case_data)

it’s time to create our model of how the data was generated.

Create a PyMC model object.

nhl_bayes_model = pm.Model()

Within the context of the model, define priors [in our case only one, a uniform for p [recall, p is the probability of a team winning, *given* in front after 2/3] where we assume each value between 0 and 1 to be equally likely] and construct a representation of the data generating process.

with nhl_bayes_model:
    # prior for p
    p = pm.Uniform(name = "p", lower = 0, upper = 1)

    # given values of p & N, what's the probability of seeing K games satisfying our criterion?
    # this is the data generating process
    likelihood = pm.Binomial(name = "likelihood", p = p, n = number_of_games)

Tell PyMC that we’ve observed data for the Binomial random variable [the number of games where the team in front kept the lead & won the game] by adding an extra parameter [observed = number_of_ones] to the “likelihood” variable above, so that the line [still inside the model context] becomes:

    likelihood = pm.Binomial(name = "likelihood", p = p, n = number_of_games, observed = number_of_ones)

Using this code, PyMC will now understand that it should run comparisons for all possible p's between 0 and 1 to try to understand which one of those values is most likely [or, more specifically, exactly how likely each such p is] to have generated 836 successes in 1 008 datapoints [our observed value].
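To see what “comparing all possible p’s” means in practice, here’s a brute-force sketch using a grid of candidate values. This is NOT what PyMC does internally [it samples with MCMC instead of enumerating a grid]. It’s just the simplest way to show the reweighting idea for a one-parameter problem. The grid resolution of 0.001 is an arbitrary choice.

```python
import math

wins, games = 836, 1008  # observed values from the post

# Candidate values of p; endpoints 0 and 1 are left out to avoid log(0).
grid = [i / 1000 for i in range(1, 1000)]


def log_binomial_likelihood(p: float, k: int, n: int) -> float:
    """Log of the Binomial probability of k successes in n trials."""
    log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_choose + k * math.log(p) + (n - k) * math.log(1 - p)


# Flat prior: every candidate gets the same prior weight, so the
# unnormalised posterior is simply the likelihood of the observed data.
log_posterior = [log_binomial_likelihood(p, wins, games) for p in grid]

# Subtract the maximum before exponentiating for numerical stability,
# then normalise so that the weights sum to 1.
max_log = max(log_posterior)
weights = [math.exp(lp - max_log) for lp in log_posterior]
total = sum(weights)
posterior = [w / total for w in weights]

best_p = grid[posterior.index(max(posterior))]
posterior_mean = sum(p * w for p, w in zip(grid, posterior))
print(f"most plausible p on the grid: {best_p:.3f}")
print(f"posterior mean: {posterior_mean:.4f}")
```

Grids work fine with one parameter but blow up quickly as the number of parameters grows, which is where samplers earn their keep.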

As you feed a Bayesian model data, it will learn by updating/reweighting the current, prior distribution according to what’s most likely to have generated the observed data.

Well documented code for this example is available here.

Bayes, step 4

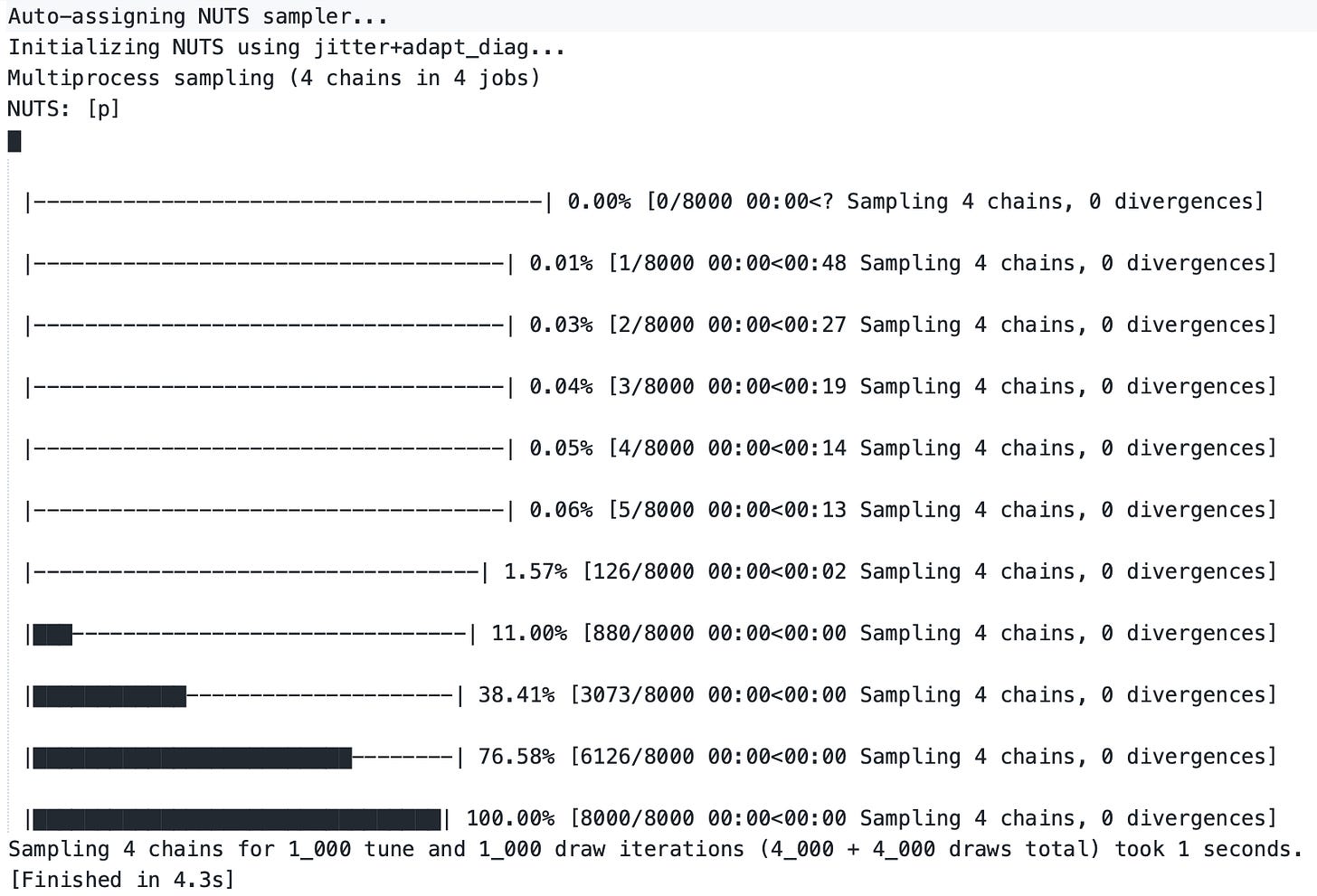

Finally, it’s time to run our model & let PyMC help us update the prior distribution by incorporating the new data. This is generally the point at which the Markov Chain Monte Carlo stuff comes into play. For now we’ll simply consider this a black box thing and instead focus on *the output*, a close approximation of the posterior distribution, i.e. the prior beliefs updated with whatever information was gained from the observed data.

To actually run the code that produces this posterior distribution we’ll have to add an extra line within the PyMC model context.

with nhl_bayes_model:
    inference_data = pm.sample()

This tells PyMC to sample from the posterior distribution [1 000 draws per chain by default, after a tuning phase] and generate an InferenceData object with the output. The InferenceData object offers multiple methods that allow for further analysis.

Running it yields the below output.

Bayes, step 5

Time to analyze what Bayes has taught us! Our output is currently stored in the inference_data variable/object which contains a ton of information. However, since:

We’re primarily interested in a basic level understanding of what the data says,

A plot is worth a thousand words,

we’ll limit ourselves to plotting the posterior distribution & investigate and draw conclusions from it from a visual point of view.

This can be done using

pm.plot_posterior(inference_data, show = True)

Result below.

Interpretations, comparisons, remarks

The posterior distribution puts most of the probability around ~0.83 which makes sense since this coincides with the observed fraction in our dataset. Note however that there are no claims whatsoever of this being “the correct” fraction. Instead, it presents a clear picture of the remaining uncertainty by providing *a distribution* of plausible values of p.

Given our uniform prior & that our data generating process is a fair representation of reality, we can be almost certain that the true probability of a team winning a game, conditional on taking a lead into the third period, lies somewhere in the range [0.80, 0.86]. Thus we’ve managed to answer our original question.
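“Almost certain” can be given a number. For this particular model [flat prior + Binomial likelihood] the posterior is known in closed form as Beta(1 + 836, 1 + 172), a standard conjugacy result, so we can cross-check the range with nothing but the standard library. This is a sketch of that cross-check, not the PyMC output itself. The seed and the number of draws are arbitrary.

```python
import random

random.seed(0)  # arbitrary seed, only for reproducibility

wins, games = 836, 1008
a, b = 1 + wins, 1 + (games - wins)  # posterior is Beta(837, 173)

# Draw from the closed-form posterior and count the draws inside the range.
draws = [random.betavariate(a, b) for _ in range(20_000)]
inside = sum(0.80 <= d <= 0.86 for d in draws) / len(draws)
mean = sum(draws) / len(draws)

print(f"posterior mean ~ {mean:.3f}")
print(f"P(0.80 <= p <= 0.86 | data) ~ {inside:.3f}")
```

The same kind of statement can be read straight off PyMC’s samples. The closed form just happens to exist for this simple model, which makes it a handy way to double check the sampler.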

With another prior, we’d have seen a different posterior. For instance, if we play around a little bit and assume the true probability to in fact be 0.82 [and be stable over different NHL seasons], we’d expect to see more certainty [narrower distribution] as well as a horizontal shift towards 0.82 if we were to repeat this analysis after the upcoming season, with *this* posterior used as that season’s prior.
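A sketch of that thought experiment, again leaning on the closed-form Beta posterior that a flat prior & Binomial likelihood produce. The “upcoming season” counts are HYPOTHETICAL, chosen so that the observed fraction equals the assumed true value of 0.82.

```python
import math


def beta_mean_sd(a: float, b: float) -> tuple[float, float]:
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    return mean, math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


# This season's posterior [flat prior + 836 wins in 1 008 games].
prior_a, prior_b = 1 + 836, 1 + (1008 - 836)

# HYPOTHETICAL upcoming season: 820 wins in 1 000 games with a leader.
next_wins, next_games = 820, 1000

# Conjugate update: add wins to a and losses to b.
post_a = prior_a + next_wins
post_b = prior_b + (next_games - next_wins)

mean_before, sd_before = beta_mean_sd(prior_a, prior_b)
mean_after, sd_after = beta_mean_sd(post_a, post_b)
print(f"before: mean ~ {mean_before:.4f}, sd ~ {sd_before:.4f}")
print(f"after:  mean ~ {mean_after:.4f}, sd ~ {sd_after:.4f}")
```

Today’s posterior is tomorrow’s prior. The distribution both narrows and slides towards 0.82, exactly the behaviour described above.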

Remark: Now that we’ve learnt a lot about the average, most generic probability for this kind of question, we’d perhaps like to condition on some factors to nail down more specific probabilities. For example, what about an underdog against a favourite being up exactly one goal after the first two periods?

Remember: subcases like this offer less data. Therefore, if you’ve been inspired by this post and are planning to continue your betting journey by analyzing similar subcases on your own, you better sharpen your priors. If you’re not willing to go several levels down the ‘trees’ [splitting datasets on multiple variables & constantly re-performing the analysis on those mini-problems], then you’ll for sure miss out on the truly interesting aspects of your dataset. All problems are small data problems. Or, perhaps more accurately, all the GMI ones are.
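To see how quickly the data thins out when going down the ‘tree’, here’s a toy sketch that splits a handful of games on two variables. The records, the field layout and the choice of split variables are all HYPOTHETICAL. Real analyses would of course start from the full dataset.

```python
from collections import defaultdict

# HYPOTHETICAL records: (leader_was_favourite, lead_after_two_periods, leader_won)
games = [
    (True, 1, 1), (True, 2, 1), (False, 1, 0), (False, 1, 1),
    (True, 1, 0), (False, 2, 1), (True, 3, 1), (False, 1, 0),
]


def split_counts(records):
    """Group records by (favourite?, exactly one-goal lead?) and
    return {key: [wins, games]} for each subset."""
    counts = defaultdict(lambda: [0, 0])
    for was_favourite, lead, won in records:
        key = (was_favourite, lead == 1)
        counts[key][0] += won
        counts[key][1] += 1
    return dict(counts)


for (favourite, one_goal), (wins, n) in sorted(split_counts(games).items()):
    role = "favourite" if favourite else "underdog"
    margin = "1-goal lead" if one_goal else "2+ goal lead"
    print(f"{role:>9}, {margin:<12}: {wins}/{n} won")
```

Two splits and each subset already holds only a quarter or so of the games. With so little data per subset, the prior carries a large share of the answer, hence the advice to sharpen it.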

Conclusion

This was a rather lengthy post with a fair bit of advanced content to digest & process. As mentioned earlier, take your time with the material. Read. Think. Read again. Or, similarly: Have a belief. Read. Update your belief.

Next up on the BowTiedBettor Substack is a Q&A.

On another note: currently in the midst of a BowTiedBettor project that will launch later this year. How about a real time data feed containing every single one of the latest *informative* odds drops?

Stay toon’d!

Until next time…

Disclaimer: None of this is to be deemed legal or financial advice of any kind. These are *opinions* written by an anonymous group of mathematicians who moved into betting.