Welcome Degen Gambler!

Previously we have covered two concepts which at first glance do not seem related in any way, web scraping and conditional probability.

Having learnt about the two ideas, one may make the following observations:

Web scraping is useless unless the data you collect is supposed to serve some purpose.

Mathematical formulas (in this case Bayes' theorem) cannot be expected to produce any valuable output without relevant input.

Therefore, since both web scraping and mathematics are insufficient on their own, one might instead hope to *combine them* in a way that generates something of value. Indeed, as we will see in this and the coming posts of this series, by cleverly tying the data collection and the mathematics together, it is possible to construct an excellent framework for learning from data/information, something that is known as bayesian inference*.

Note: In math, 1+1 = 2. In life, 1+1 is often greater than 2. This is a (somewhat autistic) example.

*Inference = learning from data. Geeks would probably involve esoteric terminology to explain the word; we prefer understandable explanations.

Since there is a lot to digest here, the introduction to the subject will be split into (at least) two posts. Today we will begin by gaining some intuition (probably confusion/brain damage as well, probability theory is *hard*) for bayesian thinking and then we will be back with practical applications of the theory to real world data in part 2.

Lack of information

Well, to fully appreciate the beauty of bayesian inference one must first be in a creative, bayesian mood. Therefore we’ll begin with an interesting horse racing example to introduce the bayesian way of viewing the world.

Suppose that there is a race on New Year’s Eve and that your mission is to figure out the win probabilities for all the competing horses. Furthermore, assume that at this point in time you have no information whatsoever regarding the race. Basically, all you know is that there *is* a race. Now, the question is, what is your initial set of probabilities for the race?

Indeed a very strange question, but if we make an attempt at answering it, we can probably begin by agreeing on the following very obvious fact: for a race to take place, at least one horse has to participate. Therefore, let’s zoom in on one (possibly the only one) of the participating horses. If we can estimate the probability for *this* horse winning the race, then, after having done so, we may simply repeat the same procedure with the rest of the horses and thereby find the full set of win probabilities.

Now, we immediately encounter a problem. Since we do not even know the number of competing horses, it’s obviously impossible to narrow our win probability (for the given horse) down to a *single* number. The reason is simple: we are missing far too much information.

Nevertheless, let’s continue analyzing the situation. By one of the fundamental axioms of probability we know that the probability must lie between 0 and 1. Furthermore, horse races commonly contain 8-15 horses, and with no other information a horse in an n-horse field wins with probability 1/n, i.e. somewhere between 1/15 ≈ 0.067 and 1/8 = 0.125. We therefore somehow feel that the most plausible values should lie roughly in the range [0.08, 0.12]. However, as mentioned above, we most definitely cannot state *with certainty* that the probability of the horse winning the race is, say, 0.13. Thus, even though we ‘feel like’ we know a little bit about it, we clearly don’t know enough. The lack of sufficient information makes *the probability itself* uncertain. And this uncertainty that we are now observing in the win probability is the whole point of this opening discussion.
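A quick sanity check of that range. This is a minimal sketch under our own assumption (not stated in so many words above) that, with no information beyond the field size n, every horse gets probability 1/n. Fields of 8-15 runners then give values between roughly 0.067 and 0.125, so [0.08, 0.12] is best read as the rough core of that interval rather than an exact bound.

```python
# Assumption: with no information beyond the field size n,
# symmetry suggests a win probability of 1/n for each horse.
field_sizes = range(8, 16)  # 8 to 15 runners, the range mentioned in the text

naive_win_prob = {n: 1 / n for n in field_sizes}

for n, p in naive_win_prob.items():
    print(f"{n:2d} horses -> p = {p:.3f}")

lowest = min(naive_win_prob.values())   # largest field: 1/15
highest = max(naive_win_prob.values())  # smallest field: 1/8
print(f"plausible range: [{lowest:.3f}, {highest:.3f}]")
```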

In a world without complete information, probabilities themselves are uncertain

- BowTiedBettor

Bayesian thinking

Enter bayesian thinking. Instead of either considering the problem ‘stupid’ or forcing ourselves to agree on some arbitrary number, why not incorporate the uncertainty we are observing into some kind of model? Normally, when we are uncertain about things, we consult probability theory and model the uncertainty using the tools it offers us. Why wouldn’t we do the same in this case?

Accordingly, we describe the uncertainty using a probability distribution over all possible values (the interval [0, 1]) of p, where p is the probability of the horse winning the race. We construct a probability of the probability. Integrating the knowledge mentioned previously (… horse races commonly contain 8-15 horses … the most plausible values should lie in the range [0.08, 0.12]) into our model, a reasonable representation of our initial distribution of the probability looks something like the graph below.

Autist note: Turbos will recognize this process as the design of a prior. For now this detail is not of much importance to us.

Note: The function representing how the probability is distributed (the blue graph in the picture) is called a probability density function [PDF] in probability theory.
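One way such a density could be written down in code is sketched below. The choice of a Beta distribution and the parameters `A = 20`, `B = 180` are our own hypothetical picks (they put the mean at 0.1), not something the post prescribes. The sketch evaluates the density on a grid, checks that it integrates to 1, and measures how much of the mass falls inside [0.08, 0.12].

```python
import math

def beta_pdf(p, a, b):
    """Density of a Beta(a, b) distribution at p, for 0 < p < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(p) + (b - 1) * math.log(1 - p))

# Hypothetical prior parameters, picked by hand so the mass sits near 0.1.
A, B = 20.0, 180.0

# Evaluate the density at the midpoints of N equal cells covering (0, 1).
N = 10_000
grid = [(i + 0.5) / N for i in range(N)]
density = [beta_pdf(p, A, B) for p in grid]

# A density must integrate to 1 (midpoint rule).
total_mass = sum(density) / N

# Share of the prior mass that lies inside [0.08, 0.12].
mass_in_range = sum(d for p, d in zip(grid, density) if 0.08 <= p <= 0.12) / N

prior_mean = A / (A + B)  # mean of a Beta(a, b) distribution
print(f"total mass ~ {total_mass:.4f}")
print(f"mass in [0.08, 0.12] ~ {mass_in_range:.2f}, mean = {prior_mean:.2f}")
```

Larger values of `A + B` with the same ratio would give a narrower graph, i.e. a more confident initial belief.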

Having built this foundation, we can now continue with this approach and hopefully move closer to reality (after all, in practice we will not make much money modeling races we know nothing about). Taking the above graph as the representation of our initial belief, we now await more data, and as soon as we receive new bits of information, we simply update our current probability distribution (of the win probability) according to what the new information tells us. For example, if later this week the entries are revealed and we’re examining the (now known to be) best horse in the field, then naturally the distribution should be shifted towards higher values of p (why?).

Note: As new information emerges, P(p) must be mapped to P(p|new information).

What is more subtle, however, is the other thing that should happen to the distribution: the ‘spread’ of it should *decrease*. Why? Remember that the distribution/graph is a measure of how much we know about the probability of the horse winning the race. If for some reason we were certain (think of a coin toss where we *know* that the coin is unbiased) that the probability was 0.5, we would basically put all the mass at that point. And since we’ve just obtained more information, which by assumption is valuable information, we now know more about the race and should therefore be able to estimate the probability with increased accuracy.

If we continue iterating this process until post time, we’ll continually update our distribution of the probability, hopefully with increased precision for each new piece of information. Simultaneously, since at each step we *learn more* about the underlying process (the race), the distribution/graph should concentrate around a smaller and smaller subset of the [0, 1] interval (unless there is inherent randomness, as in a coin flip example we’ll soon familiarize ourselves with), providing us with increasingly sophisticated estimates of what the correct probability (and odds) is. In particular, and this is important, as the distribution narrows each new piece of information will have less impact on our estimates, which is similar to saying that we’ve already been able to come pretty close to the truth.
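A toy illustration of this shrinking, swapping the horse race for a setting where the update is easy to write down: a coin with unknown heads probability p, observed in batches of flips. Everything here is our own assumption for illustration: the flat Beta(1, 1) starting point, the made-up batches, and the use of the standard Beta-Bernoulli update (add heads to `a`, tails to `b`).

```python
import math

def beta_mean_sd(a, b):
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)

# Start from a flat belief over p: Beta(1, 1).
a, b = 1.0, 1.0
history = [beta_mean_sd(a, b)]

# Hypothetical batches of observations, as (heads, tails). Made-up numbers.
batches = [(3, 7), (28, 72), (310, 690)]

for heads, tails in batches:
    # Beta prior + Bernoulli observations -> Beta posterior with updated counts.
    a += heads
    b += tails
    history.append(beta_mean_sd(a, b))

for step, (mean, sd) in enumerate(history):
    print(f"after batch {step}: mean = {mean:.3f}, spread (sd) = {sd:.4f}")

spreads = [sd for _, sd in history]
```

With these particular numbers the spread drops at every step, and the mean moves less with each batch even though the batches keep getting larger.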

An example of the evolution of the process is shown in the pictures below.

Note: ‘Information’ is a very broad term. For instance, as in the example in the graphs above, the way other bettors price a race could most definitely be used to sharpen your predictions. This agrees with the common sense that if a bookie/bettor possesses more information than you do, their actions should be considered valuable information and should therefore make you update your current estimates of the probabilities *prior to* placing any bets. On the other hand, suppose you’ve reached a high degree of certainty in your modeling and a not-so-sharp bookie comes along and offers a +EV bet. Their price doesn’t force you to update anything, since their information is not information (your size is not size etc…) and you’re already fairly certain about your estimates, so you hit it without even thinking.

The perfect world, the real world

In a perfect world where we would reach complete (probability-wise, not in the sense of knowing the result in advance, we’re not gods) information at post-time, the whole distribution would contract/converge to a single point, and there would be no remaining uncertainty regarding the probability of the horse winning the race. In fact, even if there was some kind of maximum information (as in the example below) one could extract from the process, and we managed to describe the remaining randomness flawlessly, this would still suffice to compute the correct win probabilities (proof + intuition below) of the participants.

Example: If you have a bag with two coins, one biased (75-25) and one unbiased (50-50), and pick a coin at random, you may compute the probability of heads by considering the probability distribution [p = 0.75 with prob 0.5, p = 0.5 with prob 0.5] of this probability. In some sense you know everything there is to know (can calculate correct probabilities/odds for a bet on heads), even though you cannot state for certain what the true probability is when the coinflip takes place.

In practice, we never reach either complete or maximum information, so we’re always left with some kind of probability density function describing the remaining uncertainty and/or randomness. If we assume that the density is a fairly good approximation of reality, then a simple mathematical theorem, which in fact has already been used (in the coin flip case), lets us compute *our estimate* of the true probability.

Observe that we by assumption have complete knowledge of:

The probability that the horse’s winning probability attains some specific value (e.g. 0.14).

The probability that the horse in fact wins the race, given a certain winning probability (of course equal to the given winning probability).

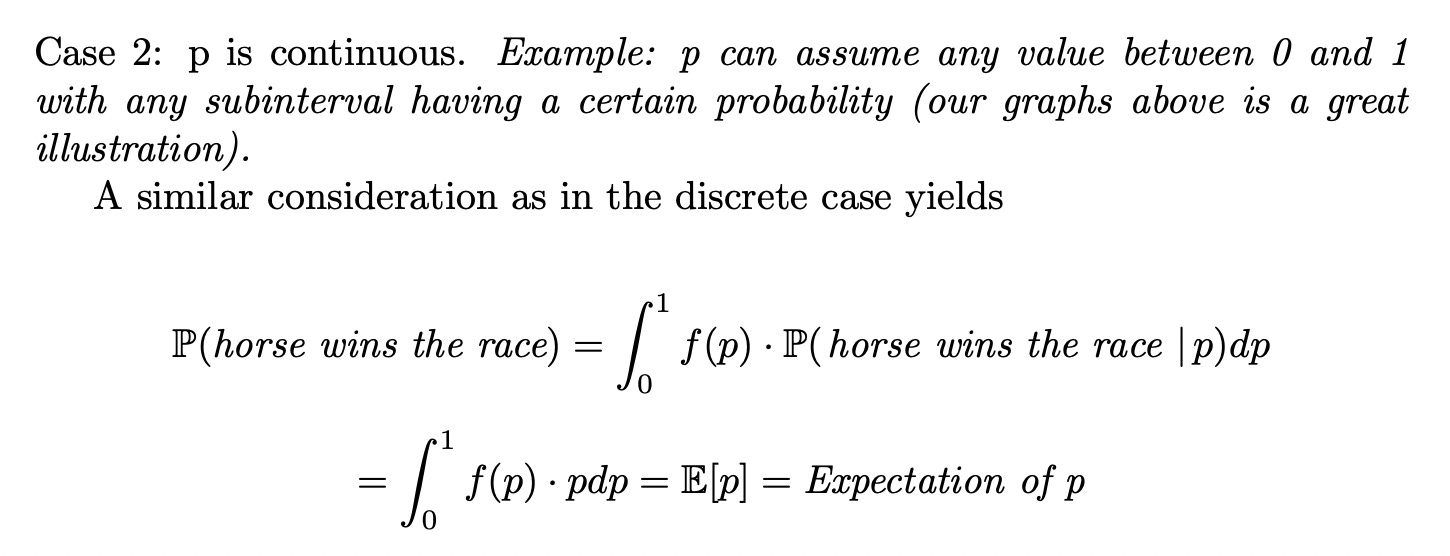

Therefore, to compute the true/real world win probability, we can loop over all possible values of p and weight each p by the probability that it is attained, which is completely described by our density/graph/distribution. An experienced observer will quickly conjecture that this operation is equivalent to computing the expected value [EV] of p over its specified probability distribution. Indeed (verified by the accompanying math) this is true.
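The "loop over all possible values of p" can be taken quite literally. The sketch below does it numerically for a continuous density, approximating the integral of p times the density with a midpoint sum. The Beta(20, 180) density is a hypothetical stand-in for the graph (our assumption), chosen because its expected value is known to be 20 / 200 = 0.1, which gives something to check the loop against.

```python
import math

def beta_pdf(p, a, b):
    """Density of a Beta(a, b) distribution at p, for 0 < p < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(p) + (b - 1) * math.log(1 - p))

# Hypothetical belief about the horse's win probability p.
A, B = 20.0, 180.0

N = 10_000          # number of grid cells over (0, 1)
width = 1.0 / N     # width of each cell

win_prob = 0.0
for i in range(N):
    p = (i + 0.5) * width                 # candidate value of p
    weight = beta_pdf(p, A, B) * width    # probability that p lies in this cell
    win_prob += p * weight                # P(win | p) = p, weighted by P(p)

expected_value = A / (A + B)  # closed-form mean of Beta(a, b)
print(f"looped estimate = {win_prob:.4f}, expected value = {expected_value:.4f}")
```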

This method of looping over all potential values of p was exactly the method used to find the probability of heads in the earlier bag + coin flip example. In that case the two possible values of p were 0.75 and 0.5, and the probabilities of seeing them 0.5 and 0.5 respectively. Therefore, it immediately followed that the probability of heads was given by 0.5*0.75 + 0.5*0.5 = 0.625.
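The same calculation as a few lines of code. The variable names are ours and the numbers are the ones from the bag example.

```python
# Each entry: (value of p, probability that this value is the true one).
coin_bag = [(0.75, 0.5), (0.50, 0.5)]

# The weights form a probability distribution, so they must sum to 1.
assert abs(sum(weight for _, weight in coin_bag) - 1.0) < 1e-12

# Weight each possible p by the probability of seeing it, then add up.
prob_heads = sum(p * weight for p, weight in coin_bag)
fair_decimal_odds = 1 / prob_heads

print(prob_heads)         # 0.625
print(fair_decimal_odds)  # 1.6
```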

Why ‘bayesian’?

The necessary building blocks of bayesian thinking have now been presented and consequently we are ready to go full autism by delving deep into bayesian inference. However, to clear up some confusion, what is this ‘bayesian’ thing that keeps popping up? You’ve heard of Bayes & Bayes' theorem, but so far… no sight of him! Why is the stuff called ‘bayesian’?

Well, in the above quite abstract discussion there was a very fundamental notion that was left more or less untouched. How are we supposed to actually move from the prior distribution (pre-information) to the posterior distribution (post-information)? Our current extremely naive method of guessing and drawing some graphs does not take us far, and there is no doubt that a better procedure must be developed.

BAYES!

Bayes' formula is, as you should know at this point, an equation which offers the possibility to calculate a conditional probability of something given something else. Handy, isn’t it, since our whole mission is to compute the probability of something (e.g. the probability of a horse winning a race), given data/information. Thus, if we combine the earlier reasoning with Bayes' formula, we naturally arrive at bayesian inference.
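For reference, this is how Bayes' formula looks when the unknown quantity is the win probability p itself. The notation is ours: D stands for the new data/information, P(p) for the prior distribution, P(D | p) for how likely the data is if the win probability were p, and q is just a dummy integration variable.

$$
P(p \mid D) = \frac{P(D \mid p)\, P(p)}{P(D)}, \qquad P(D) = \int_0^1 P(D \mid q)\, P(q)\, dq
$$

The left-hand side is the posterior distribution. The denominator does not depend on p, so it only rescales the result so that the posterior integrates to 1.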

Conclusion

This post is already pretty dense at this point so to save you some brains for the rest of the night we will save the practical applications for the next post in this bayesian series. Nevertheless, to give you a hint of what is coming we wrap up today’s post by stating two interesting problems that can be handled perfectly by the reasoning we have just developed:

You have data on your last 473 bets (e.g. testing a strategy/tailing another bettor) and would like to examine whether you/they have an edge or not. Update: This problem was handled in Bet sequences, an analysis.

After having read our posts on web scraping, you have just scraped and stored all the NHL data from the previous season. You are now interested in learning as much as possible about it. For example, since you find live odds intriguing, you wonder what the probability of a team winning a game, conditional on taking a lead into the third period, is.

Try to figure out how one could answer the above questions, anon.

Then, continue with part two of this Bayesian series!

Until next time…

Great post and thanks for this Substack.

Can you help explain how in a race with a range of 8-15 horses we should expect probabilities in the range of [0.08, .12]?