Build your first odds scraper

Level 5 - SHARP

Welcome Avatar!

Today we will build our first basic odds scraper. We will use Python only, so some elementary Python knowledge is more or less a prerequisite if you expect to learn something from this overview. If you only know other languages, or have no programming experience at all, we link to YouTube guides throughout the post that cover the groundwork, so that you can come back later and make use of it.

Step 1: Find something you would like to scrape

Suppose we are betting MLB and, for some reason (observing market trends, wanting to know *when* prices move), would like to scrape Unibet's odds for a night’s games once an hour during the preceding afternoon.

How do we proceed?

Step 2: Download necessary modules and programs

There are a few options here, but in this guide we have chosen to go with Selenium. After installing Python (guide here) and a text editor of your choice (we are using Sublime Text, great guide here), you will need to install Selenium. The easiest way on Mac or Linux is to run

pip install selenium

or

pip3 install selenium

in the terminal. On Windows, run

py -m pip install selenium

in the command line. For a YouTube guide on pip, see this video.

Furthermore, to use Selenium with Chrome you will need to download ChromeDriver, the standalone program that lets Selenium control the Chrome browser. Visit this site and download the version matching your current Chrome version (you find this by clicking the three dots in the upper right corner → Help → About Google Chrome).

With all the installations completed, we are ready to start the scraping process.

Autist note: In terms of speed, Selenium is *very slow* compared to alternatives such as fetching the data straight from the API the website itself gets its numbers from. If you are optimizing for speed, there are much better approaches than the one used in this post.

Step 3: Initiate a selenium/webdriver session in your script

We now begin building our script by importing the relevant modules and setting up a Selenium session. To keep things simple we will use manual time waits, so it is a good idea to import the time module as well (it is part of Python's standard library, so there is nothing extra to install).

"""

Import relevant modules

"""

from selenium import webdriver

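from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

import time

"""

Set up a selenium/webdriver session

"""

# Find chromedriver on your computer, copy full path and paste here

path = "PATH_TO_CHROMEDRIVER"

# Recent Selenium 4 releases expect the driver path wrapped in a Service object

driver = webdriver.Chrome(service=Service(path))

Step 4: Open the website through webdriver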

Now that our webdriver session is up and running, we want to visit the website of interest and start analyzing the HTML code to locate the information we would like to extract. A quick Google/Unibet search turns up this landing page, where Unibet lists all odds on today’s MLB games. We add a driver.get call to our script and run it to arrive at the page.

Note: You do want to visit the page via Selenium, since you are then assured you are seeing the same things as the driver object you are instructing. If you are a frequent visitor to the website you are scraping, it is very likely you are not seeing exactly the same material a new, fresh visitor would.

"""

Import relevant modules

"""

from selenium import webdriver

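from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

import time

"""

Set up a selenium/webdriver session

"""

# Find chromedriver on your computer, copy full path and paste here

path = "PATH_TO_CHROMEDRIVER"

# Recent Selenium 4 releases expect the driver path wrapped in a Service object

driver = webdriver.Chrome(service=Service(path))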

"""

Call the relevant website

"""

driver.get("https://www.unibet.com/betting/sports/filter/baseball/mlb/all/matches")

After running this script, the Unibet landing page should open in an automated Chrome session on your computer. It is now time to find the data in the HTML code.

Step 5: Search the HTML-code for your data

The Wikipedia page and this YouTube video describe HTML and how to locate elements comprehensively; for our purposes, however, we will not need more than the absolute basics.

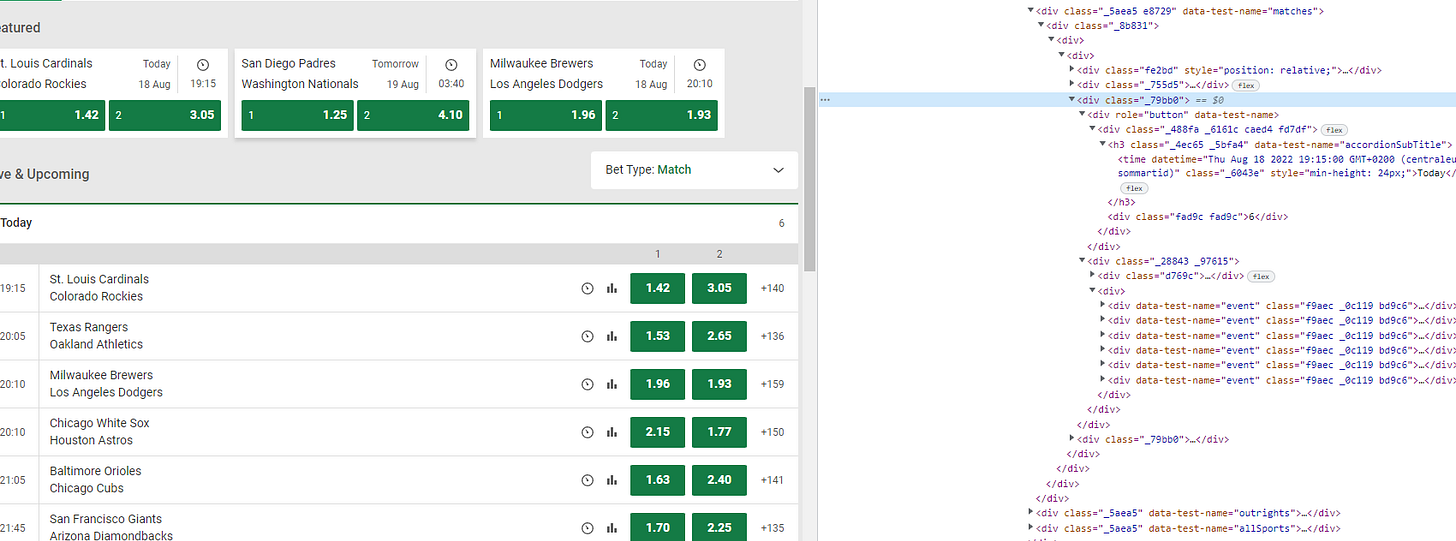

Right-clicking on ‘Today’ and choosing to inspect the element opens the following HTML code in our browser.

We focus on the <div> containing the whole segment corresponding to ‘Today’. By hovering over a few lines we note that this is the <div> with class name “_79bb0”. Thus, after adding a manual time wait to make sure the page has loaded, we instruct our driver object to find this element and store it in a variable. This leaves us with the code below.

"""

Import relevant modules

"""

from selenium import webdriver

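from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

import time

"""

Set up a selenium/webdriver session

"""

# Find chromedriver on your computer, copy full path and paste here

path = "PATH_TO_CHROMEDRIVER"

# Recent Selenium 4 releases expect the driver path wrapped in a Service object

driver = webdriver.Chrome(service=Service(path))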

"""

Call the relevant website

"""

driver.get("https://www.unibet.com/betting/sports/filter/baseball/mlb/all/matches")

time.sleep(1)

"""

Find the 'Today' element and store it

"""

today_object = driver.find_element(By.CLASS_NAME, "_79bb0")

Autist note: This search could certainly use a number of improvements to ensure the right information is found at all times; however, this text is primarily meant for illustrative purposes.
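One such improvement is to replace the fixed time.sleep(1) with a wait that polls until the element is actually there. Selenium ships its own WebDriverWait helper for this purpose; the sketch below is a plain-Python stand-in that shows the idea without needing a browser. The names wait_until and fake_lookup are hypothetical and exist only for illustration.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within %.1f seconds" % timeout)
        time.sleep(poll_interval)


# Stand-in for a page that needs a few polls before the element shows up.
attempts = {"count": 0}


def fake_lookup():
    attempts["count"] += 1
    return "today element" if attempts["count"] >= 3 else None


found = wait_until(fake_lookup, timeout=2.0, poll_interval=0.01)
print(found, "after", attempts["count"], "attempts")
```

In the real script, the condition could be a small function that calls driver.find_elements(By.CLASS_NAME, "_79bb0") and returns the matches, since find_elements returns an empty list rather than raising when nothing is found yet.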

Step 6: Loop through all the games and print the odds

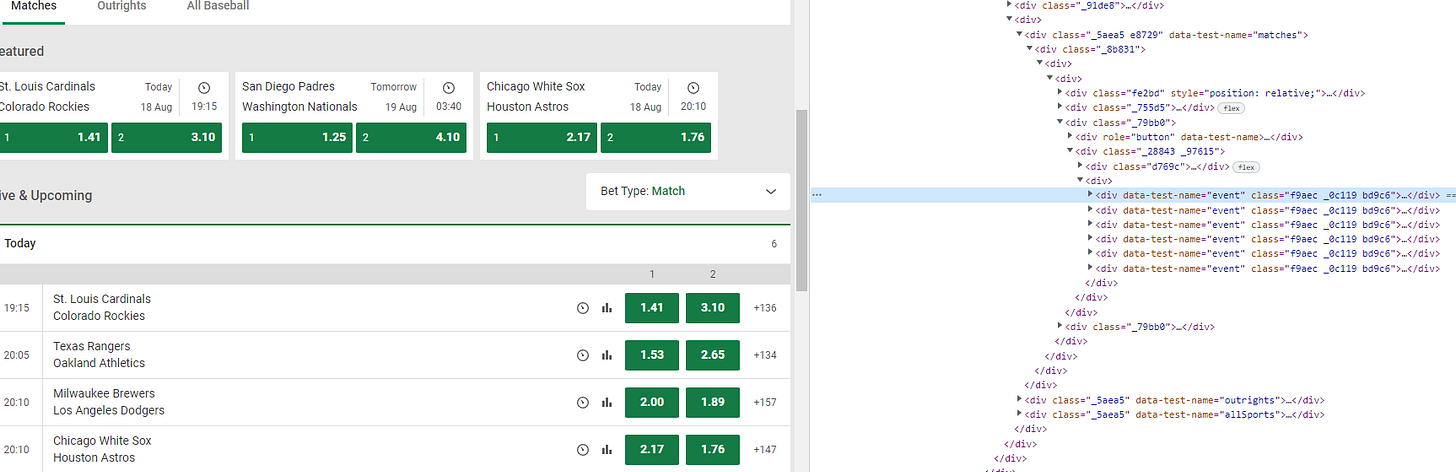

The today_object now contains all the information we need. To extract the team names as well as the odds for all games, we need to find all elements representing a game, loop through them one game at a time, and print the data we are looking for in each. We find, again by inspecting the HTML code, that the class name representing a single game is “f9aec _0c119 bd9c6”.

In summary, the goals of the next code snippet are to:

Search through the today_object and find all the games.

Create a list and store the games in the list.

which is easily performed by the following code:

"""

Find all the games and store them in a list

"""

games = today_object.find_elements(By.CLASS_NAME, "f9aec._0c119.bd9c6")

Note that we have replaced the spaces in the class name with dots to make sure the Selenium methods work as intended.
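The dotted form mirrors how CSS selectors express "an element carrying all of these classes". A minimal sketch of that conversion follows; class_attr_to_css is a hypothetical helper name, and passing its result to By.CSS_SELECTOR is offered as an alternative to the By.CLASS_NAME call above, not as what the script in this post does.

```python
def class_attr_to_css(class_attr):
    """Turn a space-separated class attribute into a compound CSS selector."""
    classes = class_attr.split()
    if not classes:
        raise ValueError("class attribute is empty")
    return "." + ".".join(classes)


game_selector = class_attr_to_css("f9aec _0c119 bd9c6")
print(game_selector)
```

With Selenium, this string could then be used as today_object.find_elements(By.CSS_SELECTOR, game_selector).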

Now it is time to loop through the games and print the names of the teams and their moneyline odds. Using a similar approach as before, we arrive at the code below, which more or less completes our script.

for game in games:

    team_names = game.find_elements(By.CLASS_NAME, "_6548b")

    team_names_list = []

    for team in team_names:

        team_names_list.append(team.get_attribute("textContent"))

    home_name = team_names_list[0]

    away_name = team_names_list[1]

    odds = game.find_element(By.CLASS_NAME, "bb419")

    home_odds = odds.get_attribute("textContent")[0:4]

    away_odds = odds.get_attribute("textContent")[4:8]

    print(home_name, home_odds)

    print(away_name, away_odds)
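The slices [0:4] and [4:8] assume every price is exactly four characters long, which breaks as soon as one side is priced at 10.00 or higher. A hedged alternative, assuming the odds text is a run of decimal prices that each have exactly two decimals (as in the output shown in this post), is to split it with a regular expression. split_decimal_odds is a hypothetical helper name.

```python
import re

# Assumption: each price looks like 1.41 or 12.50, always with two decimals.
PRICE_PATTERN = re.compile(r"\d+\.\d{2}")


def split_decimal_odds(text):
    """Return the decimal prices found in a concatenated odds string, as floats."""
    return [float(price) for price in PRICE_PATTERN.findall(text)]


print(split_decimal_odds("1.413.10"))
print(split_decimal_odds("12.501.02"))
```

In the loop, the first two values returned for odds.get_attribute("textContent") would then take the place of home_odds and away_odds.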

Pulling everything together and adding a driver.quit() at the end of it, we have the following, fully working, fully usable script.

"""

Import relevant modules

"""

from selenium import webdriver

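from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

import time

"""

Set up a selenium/webdriver session

"""

# Find chromedriver on your computer, copy full path and paste here

path = "PATH_TO_CHROMEDRIVER"

# Recent Selenium 4 releases expect the driver path wrapped in a Service object

driver = webdriver.Chrome(service=Service(path))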

"""

Call the relevant website

"""

driver.get("https://www.unibet.com/betting/sports/filter/baseball/mlb/all/matches")

time.sleep(1)

"""

Find the 'Today' element and store it

"""

today_object = driver.find_element(By.CLASS_NAME, "_79bb0")

time.sleep(1)

"""

Find all the games and store them in a list

"""

games = today_object.find_elements(By.CLASS_NAME, "f9aec._0c119.bd9c6")

"""

Loops through the games and prints the relevant data

"""

for game in games:

    team_names = game.find_elements(By.CLASS_NAME, "_6548b")

    team_names_list = []

    for team in team_names:

        team_names_list.append(team.get_attribute("textContent"))

    home_name = team_names_list[0]

    away_name = team_names_list[1]

    odds = game.find_element(By.CLASS_NAME, "bb419")

    home_odds = odds.get_attribute("textContent")[0:4]

    away_odds = odds.get_attribute("textContent")[4:8]

    print(home_name, home_odds)

    print(away_name, away_odds)

    print("")

driver.quit()

When run, the following output is produced:

St. Louis Cardinals 1.41

Colorado Rockies 3.10

Texas Rangers 1.53

Oakland Athletics 2.65

Milwaukee Brewers 2.00

Los Angeles Dodgers 1.89

Chicago White Sox 2.17

Houston Astros 1.76

Baltimore Orioles 1.63

Chicago Cubs 2.40

San Francisco Giants 1.70

Arizona Diamondbacks 2.25

Step 7: Use the data

Now that the script is complete, you simply run it and then do whatever you want with the data. There are myriad ways to analyze it, use it, store it, et cetera. It is all up to your imagination.
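As one concrete option, each run could append its prices to a CSV file together with a timestamp, which makes it possible to see later *when* a price moved. The sketch below assumes the scraped data has been collected into (team, odds) tuples; the function name append_snapshot, the column layout and the file name odds_log.csv are illustrative choices, not part of the script above.

```python
import csv
import os
import tempfile
from datetime import datetime, timezone


def append_snapshot(csv_path, rows, scraped_at=None):
    """Append (team, odds) rows to csv_path, stamping each with the scrape time."""
    scraped_at = scraped_at or datetime.now(timezone.utc)
    is_new_file = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    with open(csv_path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        if is_new_file:
            # Write the header only once, when the log is first created.
            writer.writerow(["scraped_at", "team", "odds"])
        for team, odds in rows:
            writer.writerow([scraped_at.isoformat(), team, odds])


# Example run against a temporary directory, reusing prices from the output above.
log_path = os.path.join(tempfile.mkdtemp(), "odds_log.csv")
append_snapshot(log_path, [("St. Louis Cardinals", "1.41"), ("Colorado Rockies", "3.10")])
append_snapshot(log_path, [("Texas Rangers", "1.53"), ("Oakland Athletics", "2.65")])

with open(log_path, newline="", encoding="utf-8") as handle:
    logged = list(csv.reader(handle))
print(len(logged), "lines written to", log_path)
```

In the scraper itself, the print calls inside the loop would be replaced by building the list of tuples, followed by a single append_snapshot call before driver.quit().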

Autist note: If you would like to run an odds scraper, say, once an hour every afternoon as mentioned in Step 1, you could do this with a time-based job scheduler, e.g. cron. If you are planning to obtain and use real-time data continuously throughout the day, Selenium will probably be too slow for your purposes, and you should instead research things like API usage, requests (a Python module) and WebSockets.
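cron is configured outside Python, so it is not shown here. As a rough stand-in, a long-running script can compute how long to sleep until the next full hour and call the scraper in a loop. The helper name seconds_until_next_hour is hypothetical, and this is a simplification that ignores things like restarts and daylight-saving changes.

```python
from datetime import datetime, timedelta


def seconds_until_next_hour(now):
    """Return the number of seconds from `now` to the next full hour."""
    next_hour = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    return (next_hour - now).total_seconds()


# Example: at 14:45:30 the next run is due in 14 minutes 30 seconds.
example = datetime(2024, 6, 1, 14, 45, 30)
print(seconds_until_next_hour(example))
```

A loop that sleeps for seconds_until_next_hour(datetime.now()) and then calls the scraper would fire once an hour, assuming the script above has been wrapped in a function of your own.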

Until next time…
