Do you have a friend who likes betting and would like to sharpen his/her action? Help them on their path by sharing the BowTiedBettor Substack. Win and help win!

Welcome Avatar!

Today we are back with the second part of the DraftKings web scraping project. In case you haven’t read part 1 yet, head straight to DraftKings web scraping project - Part 1/3 and begin there.

Let’s begin by recapping what was done in part 1:

- A DraftKings class was constructed to hold all our useful scraping methods.
- Two scraping methods were created: the rather lengthy get_pregame_odds [used for scraping pregame markets] and the more concise store_as_json, which allows us to dump any scraped pregame content into a local file.
- Basic tests for the pregame scraper were performed and everything looked good [unless there’s money on the line, only the virgins care about proper testing procedures].

Since the three bullet points above sum up and confirm the completion of the first part of the project, the pregame scraper, it’s time to take on the next mission: live odds scraping/streaming.

Background information

A warning: from here on the autism intensifies quite a bit. If you at times feel discouraged while digesting the content and the explanations, you’re definitely not alone. Keep going!

A natural start is to discuss and install the necessary dependencies. DraftKings uses the WebSocket protocol for their live odds data, and to interact with it we’ll need two Python libraries, websockets and asyncio. websockets is built on top of asyncio, hence the need for the latter [asyncio ships with Python’s standard library, so only websockets has to be installed].

WebSocket is a communication protocol that allows two-way, real-time communication between clients and servers over the internet. It works by establishing a single persistent connection between a client and a server (as opposed to HTTP’s request-response structure), over which data can flow in both directions at any time. This makes the protocol ideal for applications that require fast and efficient communication, such as live betting.

What’s the advantage of using the WebSocket protocol to handle live odds updates instead of the standard HTTP request-response framework? As always, it’s best described by an example!

Suppose BowTiedBettor wants to begin selling bet picks to his 500 subscribers and that there are two different “betting floor architectures” for him to choose between.

Alternative number one is for him to sit inside a small office. Each time one of his subscribers wonders about new bets, they open the door and enter the office [set up a connection]. Then, they get to ask him “hey, do you have any new bets to share?” [send a request], which he answers with either “yes, bet X on X”, or “no, not currently” [receive a response]. The moment they’ve received the response, they exit the office [close the connection]. This is the HTTP protocol.

Alternative number two is for him to buy a huge “betting floor”, allowing *all* of his subscribers in at once after a quick verification [WS “handshake”, set up a connection]. Now, instead of having to ask him the same questions over and over again [in many cases for no reason, since zero new bet opportunities have arisen], they stay silent and *await* his information. The second BowTiedBettor locates a +EV opportunity, he stands up and shouts “BET X ON X, KELLY SAYS 0.9 % OF YOUR PORTFOLIO”. All 500 subscribers receive the information immediately and hit the bookies in tandem. Two weeks later the bookies are zeros. This is the WebSocket protocol.

More formally:

- Reduced latency: With websockets, a single connection is established between the client and the server and then kept open. This reduces latency compared to HTTP, where each piece of new information requires the client to send a request [and, without connection reuse, set up a new connection] before the server can answer.
- Less overhead: In websockets, the overhead is reduced since the connection remains open, while in HTTP, the overhead grows with each request-response cycle. [Subscribers already in the same room, and staying there in between new events]
- Efficient use of resources: With websockets, the server can push data to the client at any time, which makes more efficient use of server resources than HTTP, where the server only responds to client requests. [Subscribers receive the information as soon as BowTiedBettor shouts, don’t have to enter his office and ask]
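To make the overhead argument concrete, below is a toy, in-process simulation built only on Python’s asyncio (no network, no DraftKings involved). A hypothetical “server” publishes three picks at fixed intervals; a push-style client [the betting floor] simply awaits a queue, while a polling client [the office visitor] keeps asking “any new bets?” at a fixed interval. All names and timings are made up for illustration.

```python
import asyncio


async def simulate(n_picks=3, pick_gap=0.05, poll_every=0.01):
    """Toy model: count how often each kind of client has to talk to the server."""
    picks = []               # what the polling client can look at when it asks
    queue = asyncio.Queue()  # what the push client silently awaits
    done = asyncio.Event()

    async def server():
        for i in range(n_picks):
            await asyncio.sleep(pick_gap)  # a new +EV spot appears
            pick = f"pick {i}"
            picks.append(pick)
            await queue.put(pick)          # pushed immediately to the WS-style client
        done.set()

    async def polling_client():
        requests_sent, seen = 0, 0
        while not done.is_set() or seen < len(picks):
            requests_sent += 1             # "hey, do you have any new bets to share?"
            seen = len(picks)
            await asyncio.sleep(poll_every)
        return requests_sent

    async def push_client():
        received = []
        for _ in range(n_picks):
            received.append(await queue.get())  # silent until the server shouts
        return received

    _, polls, pushed = await asyncio.gather(server(), polling_client(), push_client())
    return polls, pushed


polls, pushed = asyncio.run(simulate())
print(f"polling client sent {polls} requests, push client got {len(pushed)} messages")
```

With these settings the polling client sends many more requests than there are picks, while the push client receives exactly one message per pick; the exact poll count depends on timing.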

Below is a brief description of the asyncio library as well. For our purposes it’ll be enough to know *the absolute basics* here [trust us on this, we know more or less nothing about it].

asyncio is Python’s standard-library package for writing asynchronous, non-blocking code. It runs many tasks concurrently on a single thread: whenever one task is waiting, e.g. for a network response, control passes to another one instead of the whole program sitting idle. That makes it a popular choice for network- and other I/O-bound applications [such as a client waiting for a stream of odds updates], and it keeps your program from getting stuck while waiting for a single task to complete.
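A minimal sketch of what “non-blocking” buys you, using nothing beyond the standard library: asyncio.sleep stands in for a slow network call, and the three “books” are made up. Because every coroutine yields control while it waits, asyncio.gather finishes after roughly the longest wait rather than the sum of all waits.

```python
import asyncio
import time


async def fetch_odds(book: str, delay: float) -> str:
    # stand-in for a network call: while this coroutine waits, the event loop runs the others
    await asyncio.sleep(delay)
    return f"{book}: odds received after {delay}s"


async def main():
    start = time.perf_counter()
    # three "requests" started together and awaited concurrently on a single thread;
    # gather returns the results in the order the coroutines were passed in
    results = await asyncio.gather(
        fetch_odds("book A", 0.3),
        fetch_odds("book B", 0.2),
        fetch_odds("book C", 0.1),
    )
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
for line in results:
    print(line)
print(f"total: {elapsed:.2f}s")  # close to the slowest wait (0.3s), not the sum (0.6s)
```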

Want to know more about asyncio & websockets? See this post by

and combine it with this walkthrough of asyncio.

Note: We’ll be using Firefox throughout this project and recommend you do the same. The reason: its built-in JSON parser and great web dev tools simplify the scraping process tremendously.

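The only package that needs installing is websockets [asyncio is part of Python’s standard library; the “asyncio” package on PyPI is an outdated backport that shouldn’t be installed on modern Python]. Run

```shell
pip install websockets
```

in your terminal.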

Live odds scraping

Okay, let’s get to it!

Note: Integrating code with Substack posts hasn’t really been optimized yet. If you prefer, you could visit our DraftKings repo and follow along by having the code open in another tab instead (*definitely* recommended).

Step 1 is, of course, to visit DraftKings, more specifically their live odds page.

At the time of writing, there are two ongoing NBA games, WAS Wizards @ BKN Nets and LA Lakers @ NO Pelicans. Thus we’ll be scraping the NBA today.

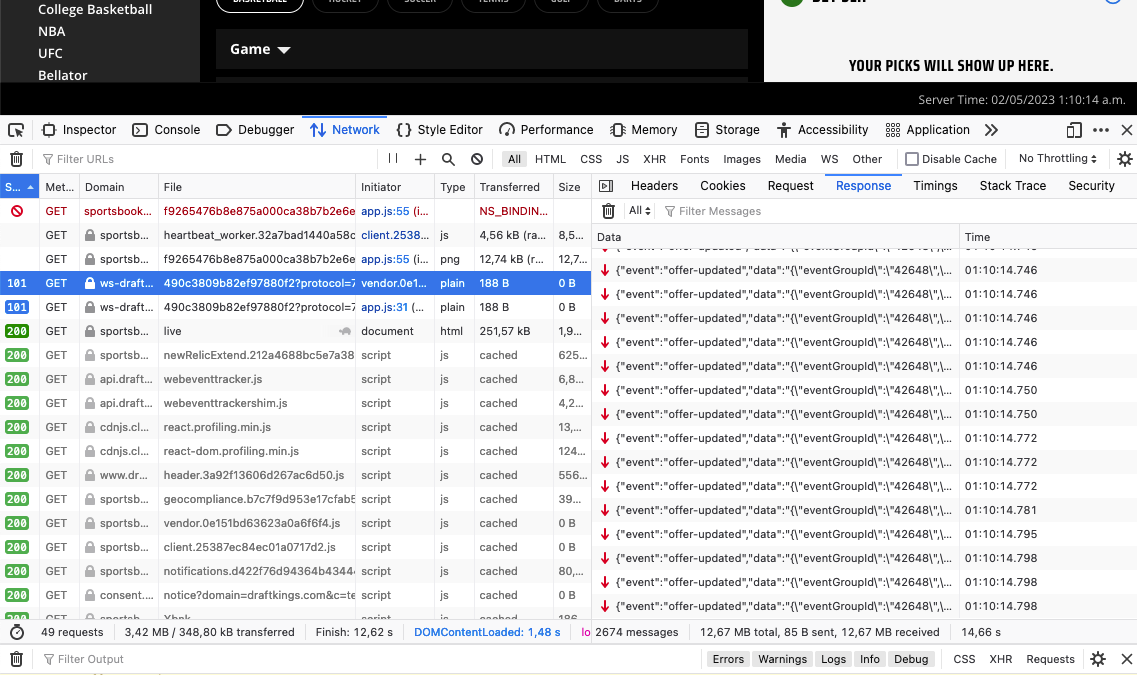

As before, right-click → Inspect to open the Firefox web dev tools and visit the ‘Network’ tab. To find the relevant websocket, sort by ‘Status’ and click one of the requests with status 101. Click ‘Response’ on the right; if you’ve found the right one, you should see a constantly updating stream of messages going back and forth between you [your web browser is sending and collecting all the messages on your behalf] and the DraftKings server.

- Green arrow: message from you to the server.
- Red arrow: message from the server to you.

The HTTP 101 Switching Protocols response code indicates a protocol to which the server switches. The protocol is specified in the Upgrade request header received from a client.
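For the curious, a sketch of what happens behind that 101 response, following the WebSocket spec (RFC 6455): the client sends an ordinary HTTP GET with Upgrade: websocket and a random Sec-WebSocket-Key, and the server proves it understood by answering with Sec-WebSocket-Accept, the base64-encoded SHA-1 of the key concatenated with a fixed GUID. The websockets library does all of this for us; the host and path below are placeholders and the helper names are hypothetical.

```python
import base64
import hashlib
import os

# Fixed GUID defined in RFC 6455, appended to the client's key by the server
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def make_client_key() -> str:
    """Random 16-byte nonce, base64-encoded, sent as Sec-WebSocket-Key."""
    return base64.b64encode(os.urandom(16)).decode()


def expected_accept(client_key: str) -> str:
    """Sec-WebSocket-Accept value the server must return for this key."""
    digest = hashlib.sha1((client_key + WS_GUID).encode()).digest()
    return base64.b64encode(digest).decode()


def upgrade_request(host: str, path: str, key: str) -> str:
    """Opening HTTP request a client sends to ask for the switch to WebSocket."""
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Key: {key}\r\n"
        "Sec-WebSocket-Version: 13\r\n"
        "\r\n"
    )


key = make_client_key()
print(upgrade_request("example.com", "/ws", key))
# a server that accepts replies "HTTP/1.1 101 Switching Protocols" including this header:
print("Sec-WebSocket-Accept:", expected_accept(key))

# sample key from RFC 6455, section 1.3
print(expected_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```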

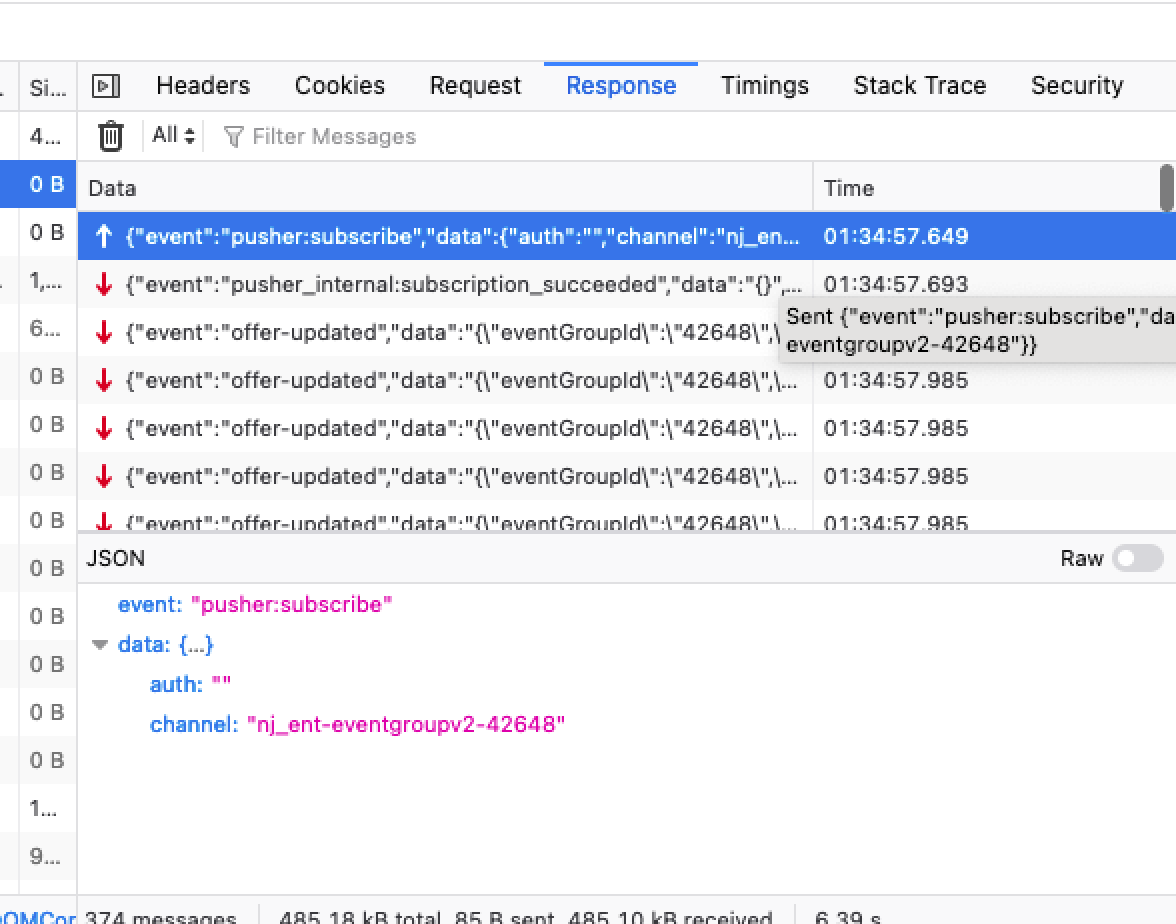

Scroll to the top to find the first message sent by your web browser to the server. This is an important message, since it’s the one we’ll try to imitate. It should be something like the below.

In the message, the browser tells the server that we want to subscribe to all information updates related to the games with eventGroupId 42648, which, as we know from before [part 1 discussed how to find the eventGroupId for a specific league], is the id for the NBA.

Click ‘Raw’ on the right and copy the JSON content.
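Since we’ll be imitating this message, it can help to build it with a small helper instead of pasting the string around. A minimal sketch, assuming the message has exactly the shape used in the code further down; the helper name subscribe_message is hypothetical, and the channel prefix nj_ent-eventgroupv2- is simply copied from the captured message (whether it differs for other states or sites is not something we’ve checked).

```python
import json


def subscribe_message(league_id: str, channel_prefix: str = "nj_ent-eventgroupv2-") -> str:
    """Builds the Pusher-style subscribe message seen in the dev tools (hypothetical helper)."""
    return json.dumps({
        "event": "pusher:subscribe",
        # auth is empty in the captured message; the channel encodes the league's eventGroupId
        "data": {"auth": "", "channel": f"{channel_prefix}{league_id}"},
    })


print(subscribe_message("42648"))
# {"event": "pusher:subscribe", "data": {"auth": "", "channel": "nj_ent-eventgroupv2-42648"}}
```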

Now, to establish a similar connection with the server ourselves, we create a new Python file [draftkings_stream.py] and define a function that will connect, subscribe and await new messages. Since we’re using the websockets library [built on top of asyncio], our function has to be asynchronous, a fact we tell Python about with the keyword async in the function definition. Below is a basic code block defining a function that sets up the connection, awaits the first message, prints it and then closes the connection again.

```python
import asyncio
import json
from traceback import print_exc

import websockets


async def stream(uri: str, league_id: str):
    """
    uri is the one you see in the web dev tools
    league_id is e.g. 42648 for the NBA
    """
    try:
        # connects to the server
        ws = await websockets.connect(uri)

        # sends a subscription message with info regarding the odds data we want to receive updates on
        await ws.send(
            json.dumps({"event": "pusher:subscribe", "data": {"auth": "", "channel": f"nj_ent-eventgroupv2-{league_id}"}})
        )

        # waits for the first message from the server and prints it
        new_message = await ws.recv()
        print(new_message)

        # closes the connection with the server
        await ws.close()

    except Exception:
        print_exc()


# usage [the uri is copied from the web dev tools]:
# asyncio.run(stream(uri="<uri from the dev tools>", league_id="42648"))
```

Running it seems to work just fine, with both the connection and the subscription functioning as intended.

To allow for a continuous stream of messages and to handle each and every one of them properly, we extend our function with a decent chunk of new functionality. Code below or on GitHub. A full description of the function follows immediately after the code block.

```python
import asyncio
import json
from traceback import print_exc

import websockets


async def stream(uri: str, league_id: str, event_ids: list = None, markets: list = None):
    """
    Sets up a connection with the server which is pushing new odds to the DraftKings website.
    Awaits further updates. As soon as updated odds information arrives it prints all the relevant
    information regarding the specific odds update.

    The function is meant to be called from the live_odds_stream method inside the DraftKings class

    :param uri str: URI for the web server
    :param league_id str: Id for the league
    :param event_ids list: If a list of event_ids is specified [else it's None], the stream/listener considers updates
                           only if they're updates for those particular games
    :param markets list: If a list of markets is specified [else markets == None], the stream/listener considers updates
                         only if they're updates for those particular markets

    Hint: If uncertain about market names, run it for a minute for all markets and collect the correct
    names of the markets this way

    :rtype: None, keeps collecting and presenting new odds info until stopped
    """
    try:
        # connects to the web server
        ws = await websockets.connect(uri)

        # sends a subscription message to the server with info regarding the odds data we want to receive updates on
        await ws.send(
            json.dumps({"event": "pusher:subscribe", "data": {"auth": "", "channel": f"nj_ent-eventgroupv2-{league_id}"}})
        )

        while True:
            try:
                new_message = await ws.recv()
                message_json = json.loads(new_message)
                event = message_json['event']

                if event != "offer-updated":
                    if event == 'pusher:connection_established':
                        print("Connection established!")
                        continue
                    elif event == 'pusher_internal:subscription_succeeded':
                        print("Subscription succeeded, awaits new odds updates...")
                        continue
                    else:
                        # print(f"Update received but for the wrong WS event type: {event}")
                        continue

                # the 'data' field is itself a JSON-encoded string
                content = json.loads(message_json['data'])

                event_id = content['data'][0]['eventId']
                if event_ids:
                    # if a list of event_ids is provided, this checks whether
                    # the update is relevant or not
                    if event_id not in event_ids:
                        # print(f"Update received but for the wrong event_id: {event_id}")
                        continue

                market = content['data'][0]['label']
                if markets:
                    # if a list of wanted markets is provided, this checks whether
                    # the update is relevant or not
                    if market not in markets:
                        # print(f"Update received but for the wrong market: {market}")
                        continue

                # if the WS event type is correct [offer-updated], the event_id is
                # for one of the games of interest & finally the market is included in the list of
                # wanted markets, then the below section is executed
                outcomes = content['data'][0]['outcomes']
                print(f"New odds update for '{market}'")
                for i, outcome in enumerate(outcomes):
                    # .get() prints None instead of crashing if an outcome has no 'line' key
                    print("Line:", outcome.get('line'))
                    print("Outcome:", outcome['label'])
                    print("Price:", outcome['oddsDecimal'])
                    # blank line between outcomes, but not after the last one
                    if i < len(outcomes) - 1:
                        print()
                print("Awaits more updates...")
                print()

            except websockets.WebSocketException:
                # if there's a problem with the connection it breaks the while loop
                # and closes the connection [could easily be adjusted to reconnect instead]
                print_exc()
                break

            except Exception:
                # any other problem [e.g. an unexpected message format] is printed
                # and the loop keeps waiting for new messages
                print_exc()

        await ws.close()

    except Exception:
        print_exc()
```

Description of the above stream function:

The function takes four parameters.

- uri. URI for the web server. Easily found in the web dev tools: double-click the right websocket in the Network tab and copy pasta the address.
- league_id. We’ve collected a couple of league_ids in our dictionary in draftkings_class.py; if you’d like to add more leagues, well, just do it.
- event_ids. A list of event ids for the games you’re interested in. To simplify the process of finding those, we’ve added a get_event_ids method [code below] to the DraftKings class, which returns both the games and their event ids.

```python
import requests


# method of the DraftKings class [draftkings_class.py]
def get_event_ids(self) -> dict:
    """
    Finds all the games & their event_ids for the given league

    :rtype: dict
    """
    event_ids = {}
    # self.pregame_url: the league's pregame endpoint, set up in the DraftKings class
    response = requests.get(self.pregame_url).json()
    for event in response['eventGroup']['events']:
        # maps game name -> event_id
        event_ids[event['name']] = event['eventId']
    return event_ids
```

- markets. A list containing the markets of interest, e.g. ['Moneyline', 'Total'].
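To check the parsing part of get_event_ids without hitting DraftKings, the loop can be pulled out into a pure function and fed a hand-made dict. A sketch, assuming only the two keys the method reads (eventGroup → events, each with name and eventId); the function name extract_event_ids and the sample below are hypothetical, with names and ids taken from the output further down. Converting the ids with str() is our own choice: the live stream example passes event ids as strings, and we haven’t verified which type the pregame endpoint returns.

```python
def extract_event_ids(response: dict) -> dict:
    """Same loop as get_event_ids, minus the HTTP request, so it can be checked offline."""
    event_ids = {}
    for event in response["eventGroup"]["events"]:
        # str() so the ids line up with the string ids passed to live_odds_stream
        event_ids[event["name"]] = str(event["eventId"])
    return event_ids


# hypothetical, trimmed-down response containing only the keys the loop reads
sample = {
    "eventGroup": {
        "events": [
            {"name": "WAS Wizards @ BKN Nets", "eventId": 28353246},
            {"name": "LA Lakers @ NO Pelicans", "eventId": 28353247},
        ]
    }
}

ids = extract_event_ids(sample)
print(ids)  # {'WAS Wizards @ BKN Nets': '28353246', 'LA Lakers @ NO Pelicans': '28353247'}
```

With str-converted ids like these, the values can be passed straight on to live_odds_stream, e.g. event_ids=list(ids.values()).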

As soon as the function is called, it sets up a connection [the initial WS “handshake”] with the DraftKings server and feeds it the necessary subscription information, exactly as before.

To allow for a “never-ending” stream of new bet offer updates, an infinite while loop is used. At each iteration it awaits a new message, and the moment one arrives it processes the content. When it’s finished, it goes back to waiting for new messages.

Note: Ctrl + C terminates a running Python script. ‘Twas unfortunately learnt the hard way.
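If you’d rather not rely on Ctrl + C, one option is to let asyncio stop the stream after a fixed number of seconds with asyncio.wait_for, which cancels the wrapped coroutine when the timeout expires. A sketch with a hypothetical run_for helper and a fake never-ending coroutine standing in for stream; it assumes the wrapped coroutine doesn’t swallow asyncio.CancelledError, which is one reason to prefer `except Exception:` over a bare `except:` inside it.

```python
import asyncio


async def run_for(coro, seconds: float) -> bool:
    """
    Runs a (possibly never-ending) coroutine for at most `seconds`.
    Returns True if it was stopped by the timeout, False if it finished on its own.
    """
    try:
        await asyncio.wait_for(coro, timeout=seconds)
        return False
    except asyncio.TimeoutError:
        # wait_for has already cancelled the inner coroutine at this point
        return True


async def fake_stream():
    # stand-in for stream(): an infinite receive loop
    while True:
        await asyncio.sleep(0.05)


stopped = asyncio.run(run_for(fake_stream(), seconds=0.2))
print("stopped by timeout:", stopped)  # stopped by timeout: True
```

Applied to the real thing, that would look something like asyncio.run(run_for(stream(uri=..., league_id=..., event_ids=..., markets=...), seconds=60)), with the same arguments live_odds_stream passes.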

Okay, a new message has arrived and needs to be handled:

- Check #1: confirm that the message is an updated offer [WS event type offer-updated], since those are the only messages we care about. If not, more or less: ignore the message and continue the while loop, i.e. await new, more informative messages.
- Check #2: if a list of event_ids was given, examine whether the message’s event_id is included in it. If not, again ignore the message and await new ones instead.
- Check #3: if a list of markets was given, make sure the odds data is for one of those markets; else skip the message and, just like before, stay toon’d for new ones.

If *all three checks* pass, the message is an odds update for an event_id in our event_ids list and a market in our markets list. In that case we want the function to print the details of the update, which it indeed does.
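The three checks can also be pulled out of the loop into a pure function, which makes them easy to test without a live connection. A sketch, assuming messages have the same structure the stream function reads (an event field, plus a data field holding a JSON-encoded string with data[0].eventId, label and outcomes); the function name filter_update and the sample message are hypothetical, and real messages carry more fields. Comparing the ids via str() is our addition, as a guard against int/string mismatches between the ids you pass and the ones in the feed.

```python
import json


def filter_update(raw_message: str, event_ids=None, markets=None):
    """
    Applies the three checks from the stream function to one raw WebSocket message.
    Returns (event_id, market, outcomes) for a relevant update, otherwise None.
    """
    message = json.loads(raw_message)

    # Check 1: only offer updates are interesting
    if message.get("event") != "offer-updated":
        return None

    # 'data' is a JSON-encoded string, hence the second json.loads
    offer = json.loads(message["data"])["data"][0]
    event_id, market = offer["eventId"], offer["label"]

    # Check 2: event filter, skipped when event_ids is None or empty
    if event_ids and str(event_id) not in {str(e) for e in event_ids}:
        return None

    # Check 3: market filter, skipped when markets is None or empty
    if markets and market not in markets:
        return None

    return event_id, market, offer["outcomes"]


# hypothetical message containing only the fields the stream function reads
sample = json.dumps({
    "event": "offer-updated",
    "data": json.dumps({"data": [{
        "eventId": "28353247",
        "label": "Moneyline",
        "outcomes": [
            {"label": "LA Lakers", "line": None, "oddsDecimal": 2.4},
            {"label": "NO Pelicans", "line": None, "oddsDecimal": 1.57142858},
        ],
    }]}),
})

print(filter_update(sample, event_ids=["28353246", "28353247"], markets=["Moneyline"]))
print(filter_update(sample, markets=["Total"]))  # None: wrong market
```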

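If a connection problem arises during execution, the websockets.WebSocketException handler prints the traceback, breaks the while loop and closes the connection. Any other unexpected error inside the loop [e.g. a message with an unexpected format] is printed and skipped, and the stream keeps waiting for new messages.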
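The comment in the code says the WebSocketException branch “could easily be adjusted to reconnect instead”. One common way to do that is a retry wrapper with exponential backoff. A sketch, with the hypothetical names run_with_reconnect and flaky_session; it assumes the session function lets connection errors propagate (i.e. re-raises instead of breaking out of the loop as the current stream does), and the offline demo uses ConnectionError where you’d pass something like (websockets.WebSocketException, OSError) in practice.

```python
import asyncio


async def run_with_reconnect(session, retry_on=(ConnectionError,), max_retries=5, base_delay=1.0):
    """
    Calls `session()` (an async function that connects, subscribes and streams) again
    whenever it fails with one of `retry_on`, waiting base_delay * 2**attempt in between.
    """
    for attempt in range(max_retries + 1):
        try:
            return await session()
        except retry_on as exc:
            if attempt == max_retries:
                raise  # give up and let the caller see the last error
            delay = base_delay * 2 ** attempt
            print(f"connection problem ({exc!r}), reconnecting in {delay:.2f}s...")
            await asyncio.sleep(delay)


# offline demo: a fake session that drops twice, then finishes
calls = 0


async def flaky_session():
    global calls
    calls += 1
    if calls < 3:
        raise ConnectionError("dropped")
    return "stream ended cleanly"


print(asyncio.run(run_with_reconnect(flaky_session, base_delay=0.01)))  # stream ended cleanly
```

Wrapping the real stream could then look like run_with_reconnect(functools.partial(stream, uri=..., league_id=..., event_ids=..., markets=...), retry_on=(websockets.WebSocketException, OSError)).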

Practical usage

To call our freshly built stream function from inside our DraftKings class [remember, we want to keep all the available methods for our scraper in one place for improved usability], we add a live_odds_stream method to it. All it does, more or less, is import the stream function from our draftkings_stream.py file and call it with the correct parameters.

```python
import asyncio

from draftkings_stream import stream


# method of the DraftKings class; id_dict is the league -> league_id dictionary in draftkings_class.py
def live_odds_stream(self, event_ids=None, markets=None):
    """
    Sets up the live odds stream by calling the async stream function with given parameters

    :param event_ids list: If a list of event_ids is specified [else it's None], the stream/listener considers updates
                           only if they're updates for those particular games
    :param markets list: If a list of markets is specified [else markets == None], the stream/listener considers updates
                         only if they're updates for those particular markets

    Hint: If uncertain about market names, run it for a minute for all markets and collect the correct
    names of the markets this way
    """
    # asyncio.run starts an event loop and runs the stream coroutine until it returns or is interrupted
    asyncio.run(stream(
        uri=self.uri, league_id=id_dict[self.league], event_ids=event_ids, markets=markets))
```

Well, at this point it’s indeed getting exciting.

- The get_event_ids method is working as intended.
- The tough one, the stream function, is complete.
- The DraftKings class has been made compatible with the stream function.

Thus, testing time!

Opening draftkings_script.py, the file made for *using* our code, and running the lines below in it should print all the event ids for the NBA games currently listed at DraftKings.

```python
from draftkings_class import DraftKings

# Create a DraftKings class object
dk = DraftKings(league="NBA")

# Find all games & their event_ids
game_ids = dk.get_event_ids()
for game, event_id in game_ids.items():
    print(game, event_id)
```

Great success! Output below.

```
WAS Wizards @ BKN Nets 28353246
LA Lakers @ NO Pelicans 28353247
LA Clippers @ NY Knicks 28353252
PHO Suns @ DET Pistons 28353250
MIA Heat @ MIL Bucks 28353255
HOU Rockets @ OKC Thunder 28353253
POR Trail Blazers @ CHI Bulls 28353260
DAL Mavericks @ GS Warriors 28353869
ATL Hawks @ DEN Nuggets 28353262
ORL Magic @ CHA Hornets 28360285
CLE Cavaliers @ IND Pacers 28360286
```

This was the easy one, though.

Onto the real test, the test for the live odds scraper!

Let’s restrict ourselves to the Moneyline markets for the two live NBA games [both currently in the 4th quarter] and try to set up a stream containing all the updates for those two events. Adding two more lines of code to the draftkings_script.py script solves this for us:

```python
# Set up a stream awaiting odds updates for the Moneyline market
dk.live_odds_stream(
    event_ids=["28353246", "28353247"], markets=['Moneyline'])
```

Runtime!

Indeed, executing the code sets up the connection → subscribes to the DraftKings live odds feed → awaits new messages → filters out the information we’re interested in → handles it → prints the processed result in the console.

And the most important part: everything is working as expected.

Beautiful!

Below is the output from the first 45 seconds.

```
Connection established!
Subscription succeeded, awaits new odds updates...
New odds update for 'Moneyline'
Line: None
Outcome: LA Lakers
Price: 2.4

Line: None
Outcome: NO Pelicans
Price: 1.57142858
Awaits more updates...

New odds update for 'Moneyline'
Line: None
Outcome: WAS Wizards
Price: 1.74074075

Line: None
Outcome: BKN Nets
Price: 2.05
Awaits more updates...

New odds update for 'Moneyline'
Line: None
Outcome: WAS Wizards
Price: 1.71428572

Line: None
Outcome: BKN Nets
Price: 2.1
Awaits more updates...

New odds update for 'Moneyline'
Line: None
Outcome: WAS Wizards
Price: 1.68965518

Line: None
Outcome: BKN Nets
Price: 2.15
Awaits more updates...

New odds update for 'Moneyline'
Line: None
Outcome: WAS Wizards
Price: 1.95238096

Line: None
Outcome: BKN Nets
Price: 1.8
Awaits more updates...
```

Use the scraper

A fully functioning, properly built DraftKings scraper available within 45 seconds? Yep!

Clone it into your directory with

```shell
git clone https://github.com/BowTiedBettor/DraftKings.git
```

then simply head over to the draftkings_script.py file and do your thing.

Conclusion

The toughest part of the project, by far, has now been completed. Since the methods responsible for pulling and handling data for both pregame and live markets are finished, what remains is to add some extra methods of high practical value, such as sending email notifications and dumping content into Excel files. As soon as this last step has been completed, the scraper will be finished and the project finalized. See you in part 3!

Finally, we close off today’s post with a BR-reminder and a tweet regarding the Betfair API.

BR-reminder: As a reader of this Substack you should have completed BR at least once [your own identity] at this point or you are falling behind. >$5 000 to earn by clicking some buttons and you are still sleeping on it?

Until next time…